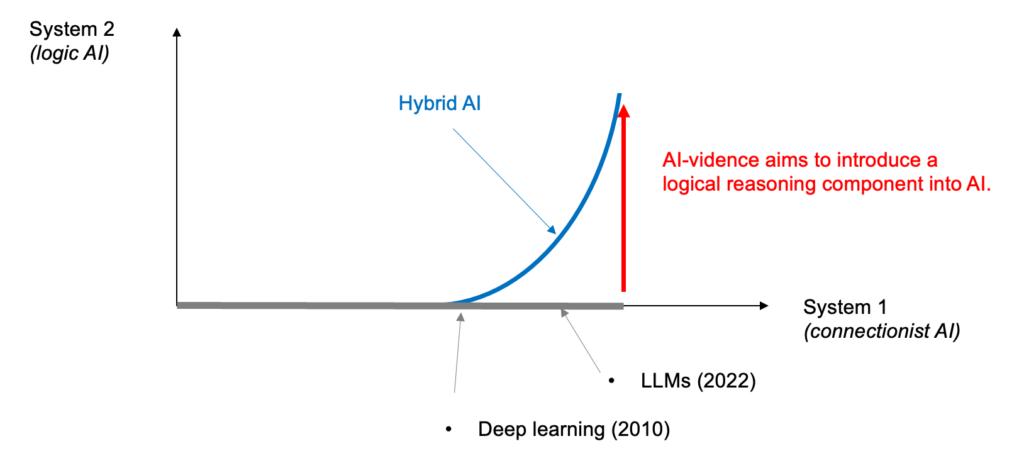

AI-vidence's mission is to make AI more explainable. We are convinced that a logical AI component (rule-based reasoning) is needed to make models robust and to provide operational guarantees. See our page on trustworthy AI.

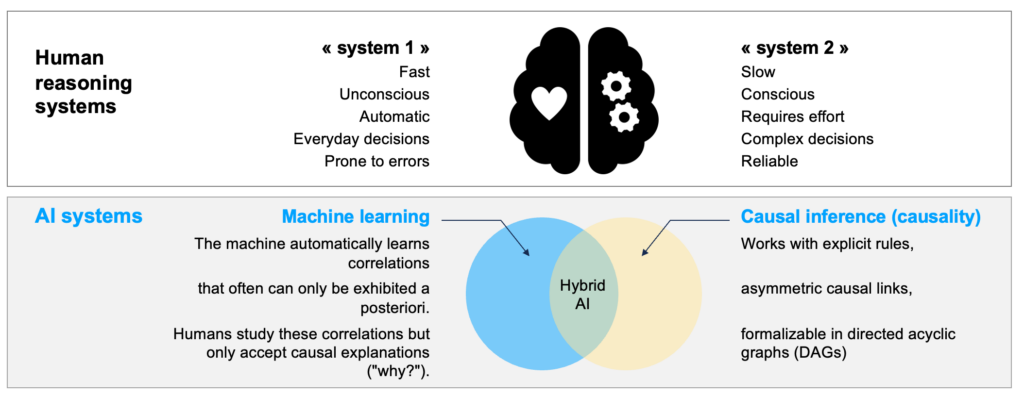

Daniel Kahneman describes two modes of operation of the human brain, System 1 and System 2. In the same way, we believe that artificial intelligence must rely on both modes: System 1, which roughly corresponds to machine learning, and System 2, which corresponds to the reasoning of logical AI.

In line with Kahneman's two systems, AI-vidence aims to help redirect AI's headlong rush toward pure connectionism by approaching trustworthy AI through logic.

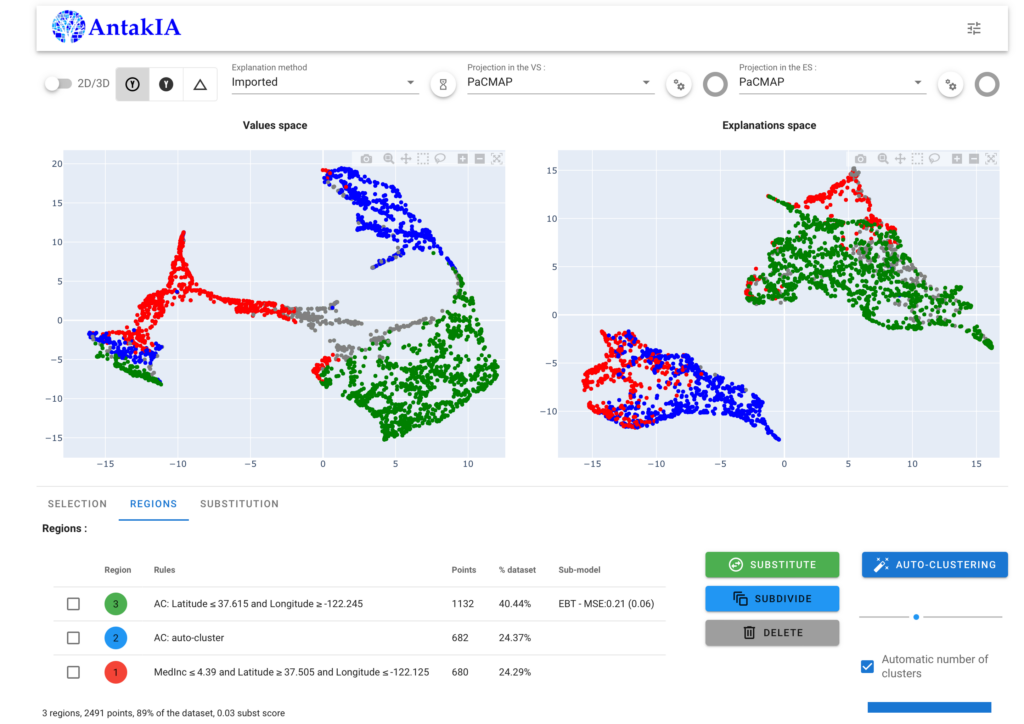

Whether dealing with simple AI models (such as regressions or decision trees) or complex ones (like deep neural networks), our approach to explainability incorporates the same key ingredients:

This approach was recognized by the ACPR during a tech sprint on explainability (read our article). It also led us to join the Confiance.ai collective.

We are a software publisher. We occasionally provide paid explainability consulting, but only to stay close to the market: working with a few select companies keeps us in touch with new, rapidly evolving issues.

Our business model is based primarily on selling licenses for our AI-vidence explainability software. We are considering several versions of the software: